When The Internet Just... Stopped: The Cloudflare Outage That Broke Half the Web

November 18, 2025 - You know that feeling when you wake up, grab your morning coffee, open Twitter (sorry, X), and instead of your usual doomscrolling feed, you get slapped with a 500 error? Yeah, that was everyone's Monday morning today. Well, technically Tuesday for those of us not in denial about what day it is.

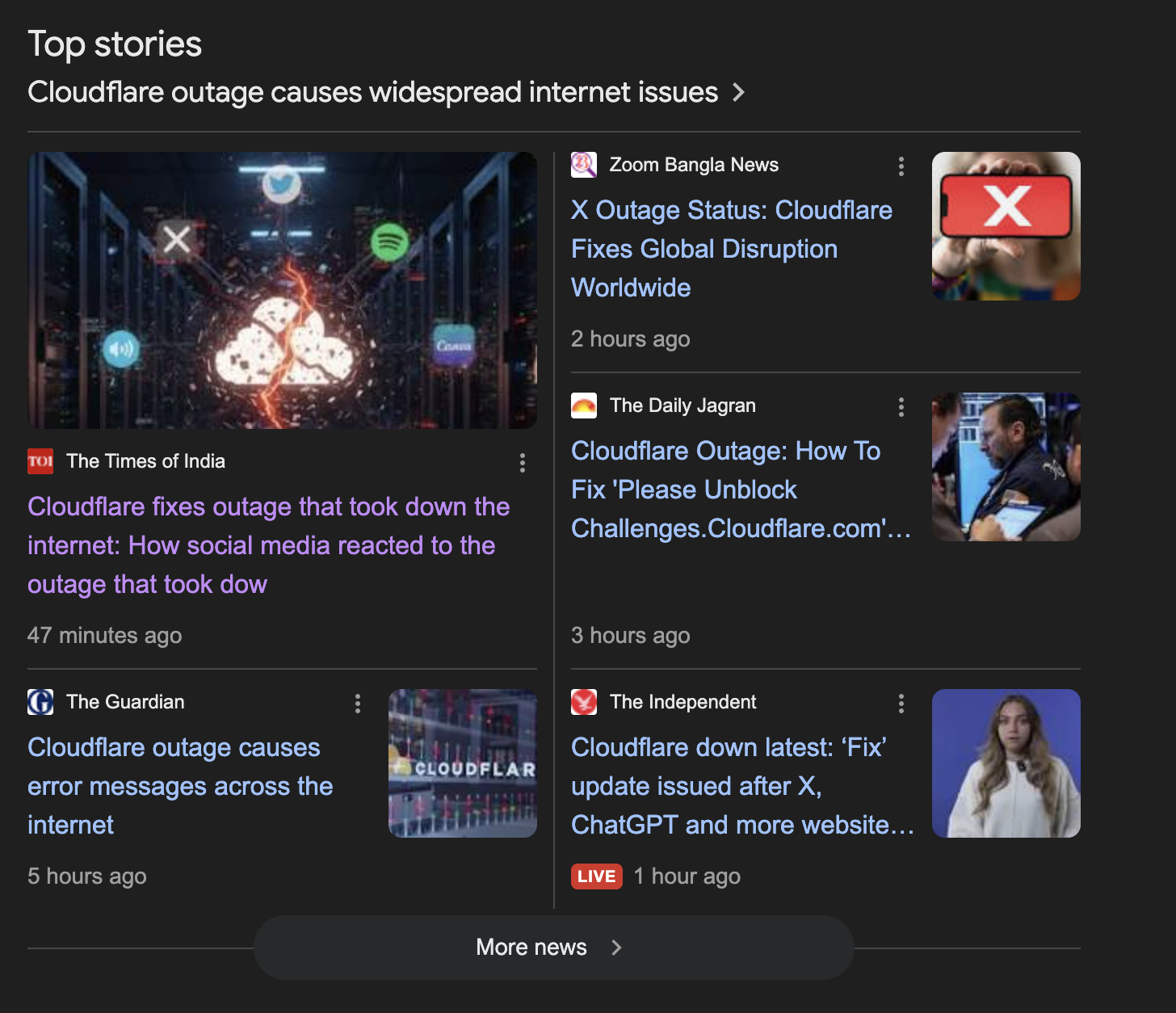

If your internet stopped working this morning, if ChatGPT suddenly went silent mid-conversation, if X was down, or if literally any of your favorite sites decided to collectively take a nap - congratulations, you experienced the latest episode of "How the Entire Internet Depends on Like Three Companies."

What Actually Happened?

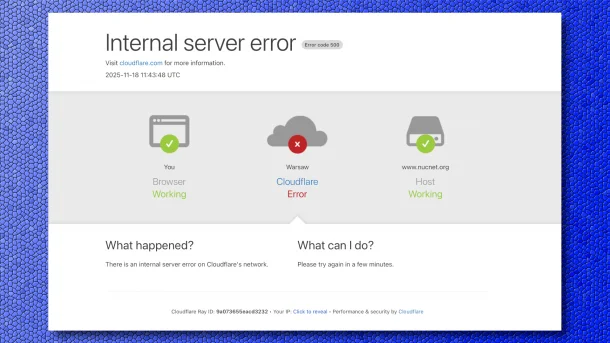

Around 6:20 AM Eastern Time (11:20 UTC for my fellow cloud nerds), Cloudflare - you know, that company that literally keeps about 20% of the internet running - decided to have what we in the industry call a "very bad day." And when Cloudflare has a bad day, everyone has a bad day.

The root cause was an automatically generated configuration file, used to manage threat traffic, that grew beyond its expected size and crashed the software that reads it. Translation? A config file got too chunky for its own good and took down the house of cards.
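To make that failure mode concrete, here's a minimal Python sketch. It is not Cloudflare's actual code: the names `load_features`, `proxy_main`, and `ConfigTooLarge`, and the 200-entry limit, are all hypothetical. The point is the shape of the bug: a loader with a hard size limit, and a caller that never plans for the loader saying no.

```python
MAX_FEATURES = 200  # hypothetical hard limit on entries the loader accepts


class ConfigTooLarge(Exception):
    """Raised when a generated config file exceeds the loader's limit."""


def load_features(lines: list[str], limit: int = MAX_FEATURES) -> list[str]:
    """Parse one feature name per non-empty line; refuse files over the limit."""
    features = [line.strip() for line in lines if line.strip()]
    if len(features) > limit:
        raise ConfigTooLarge(f"{len(features)} features exceeds limit of {limit}")
    return features


def handle_request(features: list[str]) -> str:
    # Stand-in for the real work: scoring a request with the loaded features.
    return f"scored request with {len(features)} features"


def proxy_main(config_lines: list[str]) -> str:
    # The fragile pattern: nothing here handles ConfigTooLarge, so one
    # oversized file propagates straight up and kills the whole process.
    features = load_features(config_lines)
    return handle_request(features)


if __name__ == "__main__":
    normal = [f"feature_{i}" for i in range(60)]
    print(proxy_main(normal))  # scored request with 60 features

    oversized = normal * 4  # same file with every row repeated: 240 entries
    try:
        proxy_main(oversized)
    except ConfigTooLarge as err:
        # A real proxy in this situation has no handler: it simply crashes.
        print("proxy crashed:", err)
```

Nothing about the file is malicious; it's just bigger than the code was written to expect, and the code's only answer is to fall over.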

But here's where it gets interesting. Cloudflare's CTO later explained it was actually a latent bug in their bot mitigation system that crashed after a routine configuration change. A latent bug - that's code for "this thing was waiting in the shadows like a villain in a horror movie, and we had no idea it was there until it struck."

The irony? Cloudflare's main job is protecting websites from DDoS attacks that are meant to knock them offline. Today, no attacker was needed: they knocked themselves offline. Wild.

The Domino Effect Was Real

Look, I've been doing cloud engineering and MLOps for a while now, and watching these outages unfold is like watching a masterclass in single points of failure. When Cloudflare's network started throwing widespread 500 errors, it was chaos.

Here's a partial list of what went down:

X (because calling it Twitter makes me sound old): Outage reports peaked at over 9,700, each one a person screaming into the void

ChatGPT and OpenAI: Even the AI overlords weren't immune, with intermittent access issues

Uber and Uber Eats: People couldn't order food. Like, imagine that horror

Spotify: Users couldn't access their music, which in 2025 is basically a human rights violation

League of Legends and Valorant: Gamers worldwide experienced the true meaning of rage

McDonald's self-service kiosks: Because apparently even Big Macs need cloud infrastructure now

DownDetector itself: The ultimate meta-failure - the site we use to check if things are down... was down

And here's my favorite detail: even nuclear plant background check systems (PADS, the Personnel Access Data System) were impacted, meaning visitor access to nuclear facilities was temporarily unavailable. Nothing says "modern infrastructure" quite like your nuclear security being dependent on the same service that keeps Twitter running.

The AWS and Azure Connection

If this feels familiar, that's because it is. This outage comes about a month after Amazon Web Services took much of the internet offline, and only a few weeks after Microsoft Azure went down too. It's like watching a relay race, except instead of passing a baton, we're passing around catastrophic failures.

Here's the thing that keeps me up at night as someone who works with these platforms: AWS, Azure, Cloudflare - they're not competitors in this story. They're all critical infrastructure. When people say "the cloud," they really mean "someone else's computer." And increasingly, that "someone else" is just... three companies.

The entire web depends on just a handful of companies, and when these giants experience an issue, the whole of the internet starts to crumble. We've built the digital equivalent of putting all our eggs in three very large, very sophisticated baskets. And sometimes, those baskets get dropped.
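A quick back-of-the-envelope calculation shows why the basket count matters. If a request has to pass through several providers in series, end-to-end availability is the product of their availabilities; if you have redundant providers and only need one of them, you're down only when all of them fail at once. The 99.9% figure below is an illustrative assumption, not any provider's published SLA, and the redundant case assumes failures are independent.

```python
from math import prod

HOURS_PER_YEAR = 24 * 365


def serial_availability(availabilities: list[float]) -> float:
    """All dependencies must be up at once, so multiply their availabilities."""
    return prod(availabilities)


def redundant_availability(availabilities: list[float]) -> float:
    """Any one replica is enough: 1 minus the chance that all are down together.

    Assumes failures are independent; a shared root cause breaks this.
    """
    return 1 - prod(1 - a for a in availabilities)


def downtime_hours_per_year(availability: float) -> float:
    return (1 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    one = 0.999  # illustrative "three nines" per provider, an assumption
    chain = serial_availability([one, one, one])  # e.g. CDN -> cloud -> DNS
    pair = redundant_availability([one, one])  # two interchangeable CDNs
    rows = [("single provider", one), ("three in series", chain), ("two redundant", pair)]
    for label, a in rows:
        print(f"{label:16} {a:.6f}  ~{downtime_hours_per_year(a):.2f} h down/year")
```

Three 99.9% providers in series come out around 99.7%, roughly 26 hours of expected downtime a year versus about 8.8 hours for one. Stacking dependencies multiplies risk; only genuine redundancy divides it.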

How Long Did This Nightmare Last?

The good news? It wasn't forever. Cloudflare identified the issue, implemented a fix, and by around 14:42 UTC (9:42 AM ET) said it believed the incident was resolved. Counting from 11:20 UTC, that's roughly 3 hours and 20 minutes of chaos. In internet time, approximately seventeen eternities.

By mid-morning, most services were coming back online. X started loading again (whether that's a good thing is up for debate), ChatGPT resumed its role as everyone's AI therapist, and people could finally order their McMuffins through an app like God intended.

Cloudflare's CTO issued a pretty straightforward apology: "I won't mince words: earlier today we failed our customers and the broader Internet." Respect for the honesty, honestly. No corporate speak, no "we're experiencing technical difficulties." Just straight up "yeah, we messed up."

The Technical Deep Dive (For My Fellow Nerds)

So what really happened under the hood? Cloudflare observed a spike in unusual traffic around 6:20 AM ET (11:20 UTC), which triggered this whole cascade of failures. But it wasn't an attack - there's no evidence this was the result of malicious activity.

Instead, it was that classic combination that makes every engineer's heart sink: automated systems + edge cases + routine maintenance. Cloudflare had scheduled maintenance happening in multiple datacenters (Santiago, Atlanta, Sydney), and somewhere in that mix, a configuration that should have been fine... wasn't.

The bot mitigation system - the thing that's supposed to protect against automated attacks - had a bug lurking in it. When they made what should have been a routine config change, that bug woke up, looked around, and decided to burn everything down. The configuration file grew past the size the software was built to accept, the software crashed instead of coping, and boom - cascade failure across the global network.
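The more forgiving pattern is to validate a freshly generated config before using it and keep serving with the last version that passed. Here's a minimal sketch of that "last known good" approach; the class and method names (`BotConfigHolder`, `apply_update`) and the 200-entry limit are hypothetical, not anything from Cloudflare's codebase.

```python
from dataclasses import dataclass, field

MAX_FEATURES = 200  # hypothetical limit, same idea as a loader's hard cap


@dataclass
class BotConfigHolder:
    """Holds the last config that passed validation; refuses bad updates."""

    limit: int = MAX_FEATURES
    active: list[str] = field(default_factory=list)
    rejected_updates: int = 0

    def is_valid(self, features: list[str]) -> bool:
        # Reject empty files and files bigger than the loader can accept.
        return 0 < len(features) <= self.limit

    def apply_update(self, features: list[str]) -> bool:
        """Swap in the new config only if it validates; otherwise keep the old one."""
        if not self.is_valid(features):
            self.rejected_updates += 1  # a real system would also alert someone
            return False
        self.active = list(features)
        return True


if __name__ == "__main__":
    holder = BotConfigHolder()
    good = [f"feature_{i}" for i in range(60)]
    print(holder.apply_update(good))  # True
    print(holder.apply_update(good * 4))  # False: 240 entries, over the limit
    print(len(holder.active))  # 60: still serving with the good config
```

The design choice: a bad update costs you freshness (slightly stale bot rules for a while) instead of availability (no traffic served at all).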

This is what we call a "fun" postmortem in the making.

What This Means For All of Us

Here's my hot take as someone who deals with cloud infrastructure daily: this is going to keep happening. Not because these companies are incompetent - they're not. Cloudflare, AWS, Azure: they're run by incredibly smart people with sophisticated systems. But complexity is the enemy of reliability, and modern internet infrastructure is complex.

Every time we add another layer of abstraction, another microservice, another automated system, we're adding potential failure points. And when you're operating at the scale these companies operate at - serving tens of millions of requests every second - even a 0.01% failure rate means thousands of failed requests every single second.
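To put the 0.01% figure in numbers: failures per second are just request rate times failure rate. The request rates below are round illustrative values, not measured traffic for any particular provider.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def failed_requests(requests_per_second: float, failure_rate: float) -> tuple[float, float]:
    """Return (failures per second, failures per day) at a steady request rate."""
    per_second = requests_per_second * failure_rate
    return per_second, per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    FAILURE_RATE = 0.0001  # 0.01% written as a fraction
    # Round, illustrative request rates; not measured figures for any provider.
    for rps in (1_000_000, 10_000_000, 100_000_000):
        per_s, per_day = failed_requests(rps, FAILURE_RATE)
        print(f"{rps:>11,} req/s -> {per_s:>8,.0f} failures/s, {per_day:>14,.0f} failures/day")
```

At 10 million requests per second, that's 1,000 failed requests every second, or about 86 million a day.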

The dependency on these platforms is only growing. Your doorbell? Probably uses AWS. Your smart fridge? Azure. Your ability to tweet about your smart fridge being broken? Cloudflare. We've built a world where everything is connected, and the connections all run through the same few pipes.

The Silver Lining?

If there's anything positive to take from this, it's that these outages are forcing conversations about resilience and redundancy. Every time the internet goes down, every CTO and engineering team has to ask themselves: "What's our backup plan? What if our primary CDN goes down? What if our cloud provider has issues?"

Smart companies are investing in multi-cloud strategies, building in redundancy, and designing systems that can gracefully degrade rather than catastrophically fail. The keyword there is "gracefully" - because right now, when these systems fail, it's more like a swan dive off a cliff.
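Here's what "gracefully" can look like in code, as a minimal Python sketch: try a primary provider, fall back to a secondary one, and if both are down, serve a stale cached copy instead of an error page. The provider names and the `demo_*` fetcher functions are hypothetical stand-ins; a real setup would make actual HTTP calls with timeouts.

```python
from typing import Callable

Fetcher = Callable[[str], str]


class ProviderDown(Exception):
    """Raised by a fetcher when its provider can't serve the request."""


def fetch_with_fallback(
    path: str,
    providers: list[tuple[str, Fetcher]],
    stale_cache: dict[str, str],
) -> tuple[str, str]:
    """Try providers in order, then fall back to a stale cached copy.

    Returns (source, body). Raises ProviderDown only when there is
    nothing at all left to serve.
    """
    for name, fetch in providers:
        try:
            body = fetch(path)
        except ProviderDown:
            continue  # this provider is failing; try the next one
        stale_cache[path] = body  # refresh the fallback copy on every success
        return name, body
    if path in stale_cache:
        return "stale-cache", stale_cache[path]  # degraded, but not down
    raise ProviderDown(f"no provider or cached copy available for {path}")


# Hypothetical stand-ins for real HTTP calls to two CDNs.
def demo_broken_cdn(path: str) -> str:
    raise ProviderDown("HTTP 500")


def demo_healthy_cdn(path: str) -> str:
    return f"<html>{path}</html>"


if __name__ == "__main__":
    cache: dict[str, str] = {}
    both = [("primary-cdn", demo_broken_cdn), ("backup-cdn", demo_healthy_cdn)]
    print(fetch_with_fallback("/home", both, cache))
    # ('backup-cdn', '<html>/home</html>')
    print(fetch_with_fallback("/home", [("primary-cdn", demo_broken_cdn)], cache))
    # ('stale-cache', '<html>/home</html>')
```

Users might see a slightly out-of-date page, but they see a page, which is the whole difference between a graceful landing and a swan dive.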

Also, there's something weirdly reassuring about the fact that we can identify these issues, fix them in a few hours, and get everything back online. Imagine explaining this to someone from 1995: "Yeah, the entire internet stopped working for three hours because a configuration file got too big, but we fixed it before lunch."
